Key Takeaways:

- Accurate data interpretation is crucial for making informed decisions in manufacturing, but biases and assumptions can distort insights.

- Data-driven decision-making requires reliable data sources and a clear understanding of the context.

- Implementing robust data analytics tools helps manufacturers avoid misinterpretation and uncover actionable insights.

In 30,000 BC, our ancestors painted stories about their daily lives on cave walls, and by 700 BC we had written down one of our earliest surviving stories, the Epic of Gilgamesh, on clay tablets. Fast forward to 2021, and we have been co-creating stories with AI for more than half a decade. But when the order of the day is accurate business intelligence, companies want to rest assured that their artificial intelligence and machine learning systems aren’t taking artistic liberties and spitting out a tall tale.

This is especially true now that AI- and ML-led decision-making is at its highest level of adoption to date, and even the most reluctant executives are coming to see the immense benefits of a data-centric approach to strategy. As trust in AI and ML grows, accuracy is critical. The question remains top of mind for many: “How can we tell if our data is telling us the whole story, and one that’s based on facts? What do we need to know to trust our models?”

Squeaky Clean Data

When a machine learning system offers an inaccurate prediction, the first place to look is the data. Barring black swan events, data is the beginning, middle, and end of any predictive system. It could be that there simply wasn’t enough data to reliably train the system or base its predictions on, in which case more data points can resolve the issue. Usually, a shortfall like this is caught early, while the model is still being designed, and almost certainly before deployment, at least well enough to prevent wildly inaccurate predictions. The more likely culprit is that the starting data wasn’t “clean” enough.

Clean enough is relative. The data requirements for some projects are akin to “Are my sneakers clean enough to go to the supermarket?” Others, especially critical systems and those involving life, safety, and wellbeing, call for a “dust-free, spit-shine, parade gloss you could check your teeth in” level of cleanliness before the data can reliably (and ethically) support the task.
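One way to make “clean enough” concrete is a simple quality gate that checks both how much data there is and what share of it passes validation, with a stricter bar for critical systems. This is a minimal sketch under stated assumptions: the project tiers, the 0.90 and 0.999 thresholds, the `min_records` default, and the `spindle_load` field are all hypothetical, not recommended values.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical thresholds (assumptions, not standards): the share of records
# that must pass validation before the data is "clean enough" for the project.
THRESHOLDS = {
    "low_stakes_report": 0.90,   # "clean enough for the supermarket"
    "safety_critical": 0.999,    # "parade gloss"
}


def clean_enough(
    records: Iterable[dict],
    is_valid: Callable[[dict], bool],
    project: str,
    min_records: int = 100,
) -> Tuple[bool, str]:
    """Return (passed, reason) for a simple fitness-for-purpose check."""
    records = list(records)
    if len(records) < min_records:
        # Too few data points to train on or trust at all.
        return False, f"only {len(records)} records (need {min_records})"
    ratio = sum(1 for r in records if is_valid(r)) / len(records)
    required = THRESHOLDS[project]
    if ratio < required:
        return False, f"{ratio:.1%} valid (need {required:.1%})"
    return True, f"{ratio:.1%} valid"


def has_load(record: dict) -> bool:
    """Hypothetical validity rule: the spindle load reading must be present."""
    return record["spindle_load"] is not None


# Example: 100 hypothetical readings, 5 of them missing a spindle load value.
readings = [{"spindle_load": 40.0}] * 95 + [{"spindle_load": None}] * 5
print(clean_enough(readings, has_load, "low_stakes_report"))  # (True, '95.0% valid')
print(clean_enough(readings, has_load, "safety_critical"))    # (False, '95.0% valid (need 99.9%)')
```

The same data can pass for a low-stakes report and fail for a safety-critical system, which is the point: the bar is set by the use case, not by the data.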

So, what makes data dirty? The short story is inaccurate or skewed information.

Broken Tags, Broken Models

Dirty data could be improperly tagged, have the wrong information in the wrong field, or use an incorrect format, e.g., December 27, 2015 instead of 12/27/15 or 27/12/2015. A system expecting numeric dates might simply throw an error on the first form or ignore it, but interchanging the last two formats can quietly damage accuracy: a date like 04/05/2015 parses successfully as either April 5 or May 4, so the mistake never surfaces as an error. And dates aren’t the only thing invalid formatting can affect: times, SKUs, text where numbers should be, numbers where text should be, and all the specialty data streams that come out of shop floor equipment, like overrides, alarms, loads, speeds, and feeds. Without a reliable and consistent structure, data becomes messy and leads to inaccurate insights.
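The date problem above can be contained by declaring each source’s format up front and normalizing everything to ISO 8601, rather than letting a parser guess. This is a minimal sketch using only the Python standard library; the source names (`us_mes`, `eu_erp`, `operator_notes`) are hypothetical.

```python
from datetime import date, datetime
from typing import Optional

# Hypothetical mapping from data source to the date format it is known to emit.
# The format is declared per source, never guessed per value.
SOURCE_FORMATS = {
    "us_mes": "%m/%d/%y",           # e.g. 12/27/15
    "eu_erp": "%d/%m/%Y",           # e.g. 27/12/2015
    "operator_notes": "%B %d, %Y",  # e.g. December 27, 2015
}


def normalize_date(raw: str, source: str) -> date:
    """Parse a date string with its source's declared format."""
    return datetime.strptime(raw.strip(), SOURCE_FORMATS[source]).date()


def try_normalize(raw: str, source: str) -> Optional[date]:
    """Like normalize_date, but return None so bad rows can be quarantined."""
    try:
        return normalize_date(raw, source)
    except ValueError:
        return None


# All three spellings normalize to the same ISO 8601 date.
print(normalize_date("12/27/15", "us_mes").isoformat())                  # 2015-12-27
print(normalize_date("27/12/2015", "eu_erp").isoformat())                # 2015-12-27
print(normalize_date("December 27, 2015", "operator_notes").isoformat())  # 2015-12-27

# The danger: when the day is 12 or less, the wrong format still "works".
print(datetime.strptime("04/05/2015", "%m/%d/%Y").date())  # 2015-04-05
print(datetime.strptime("04/05/2015", "%d/%m/%Y").date())  # 2015-05-04
```

Returning `None` instead of guessing lets mismatched rows be set aside for review, which is safer than silently swapping day and month.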

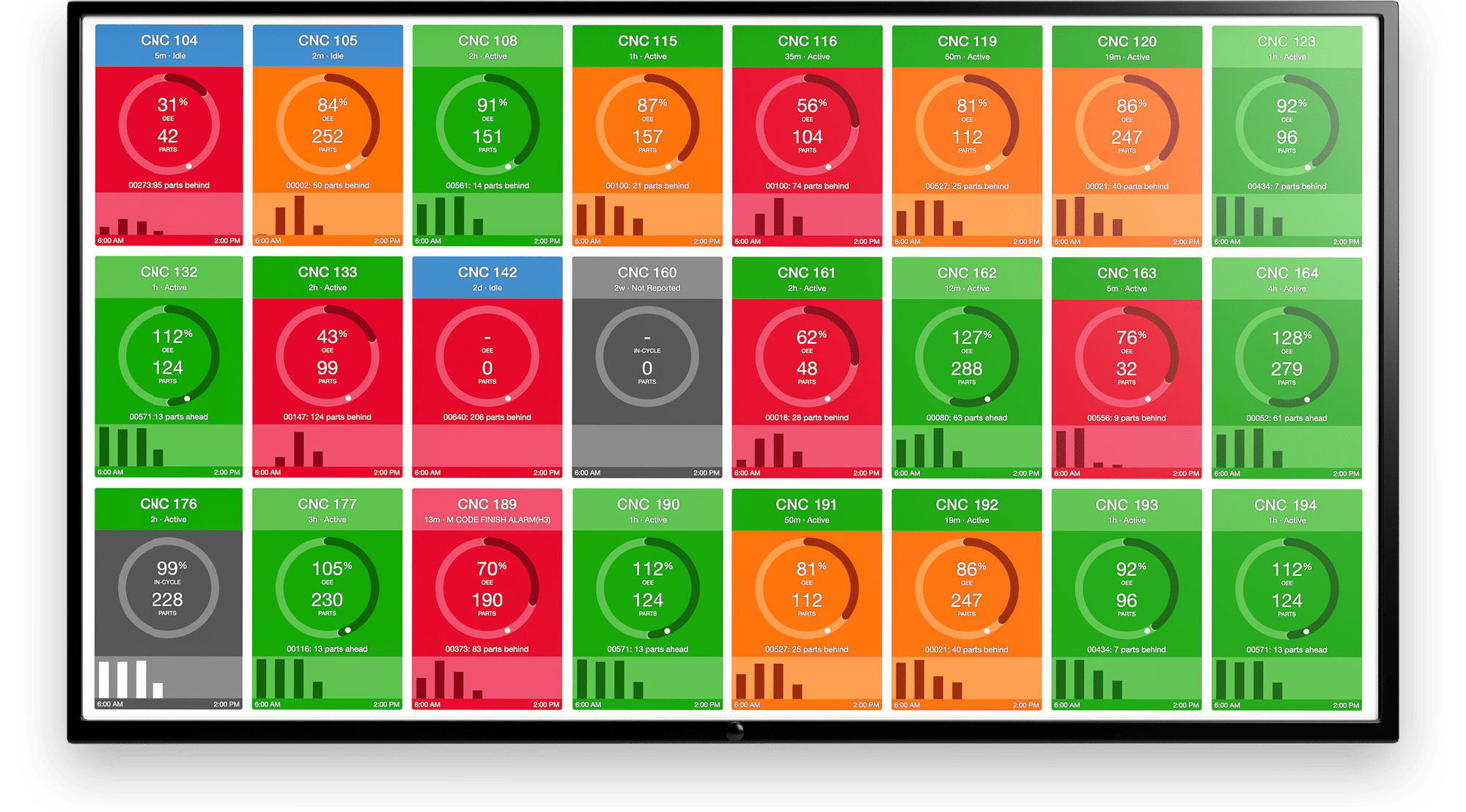

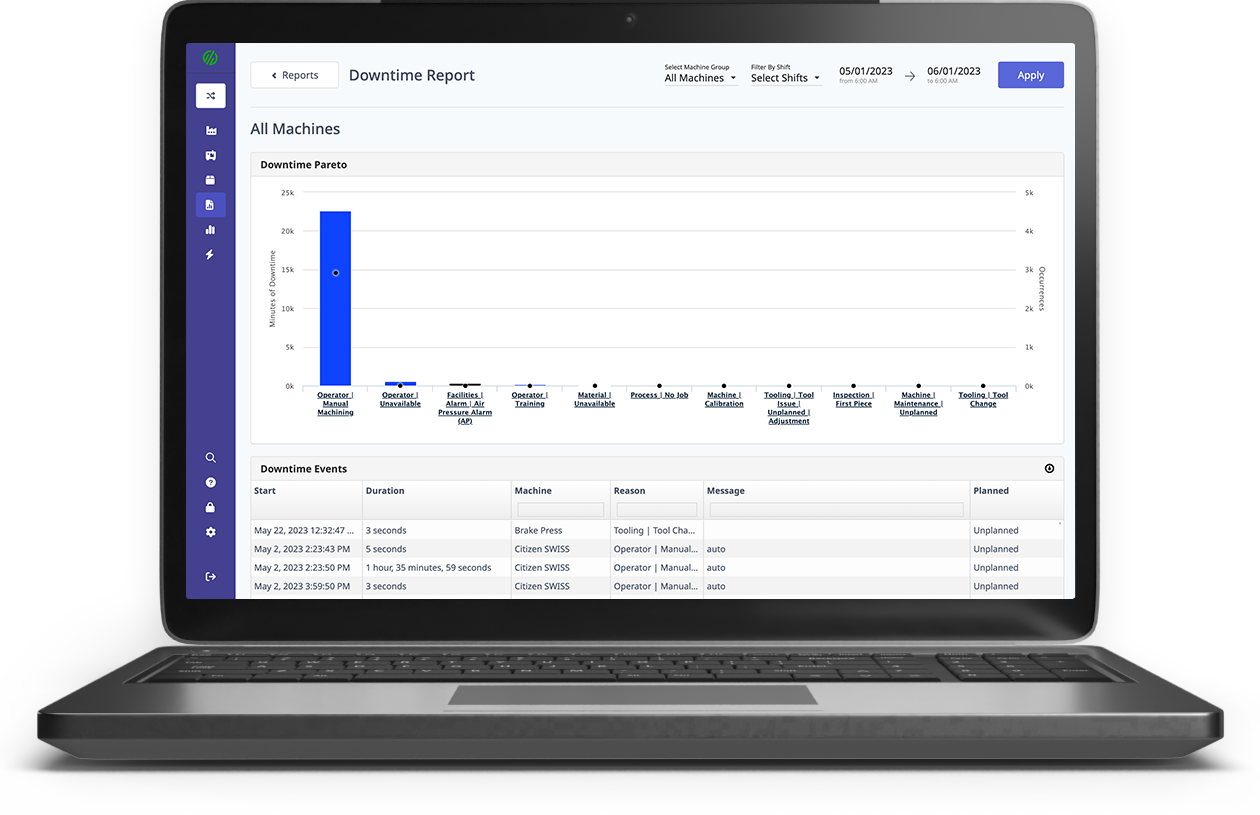

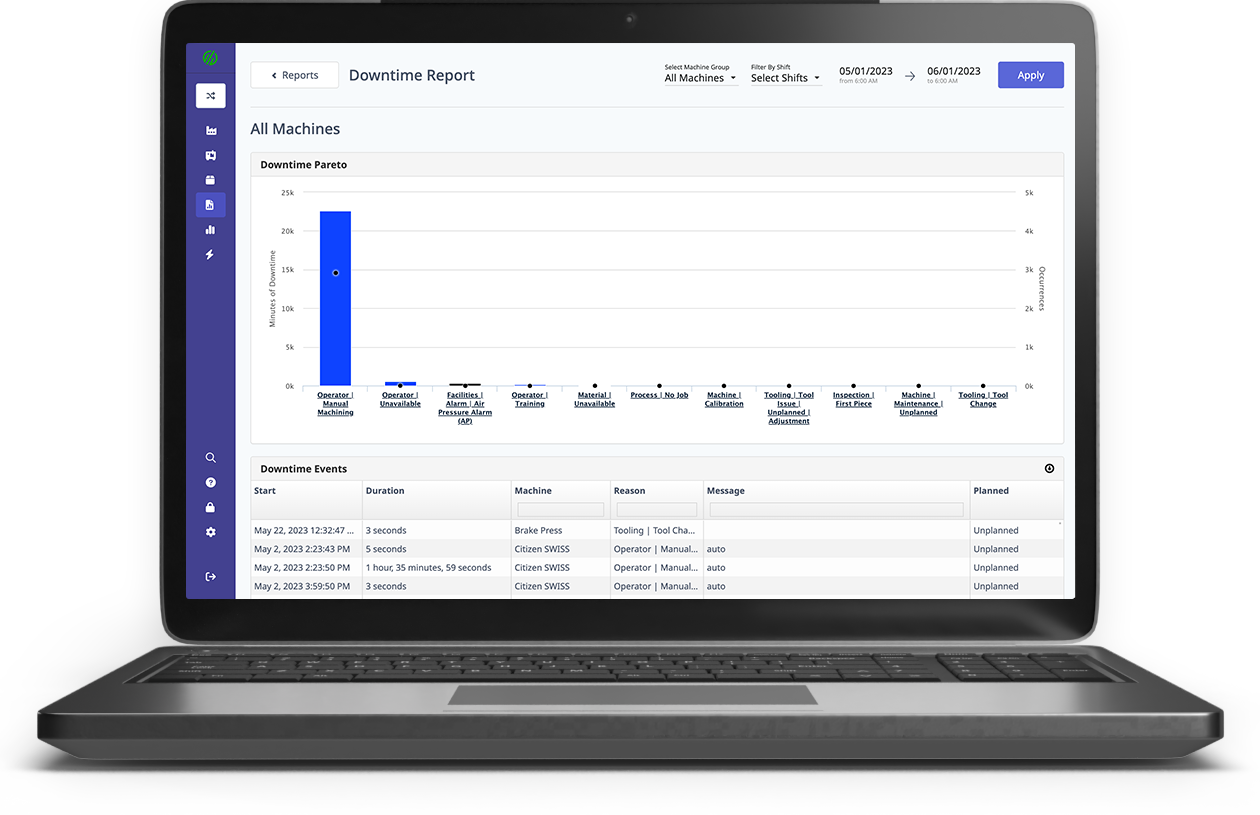

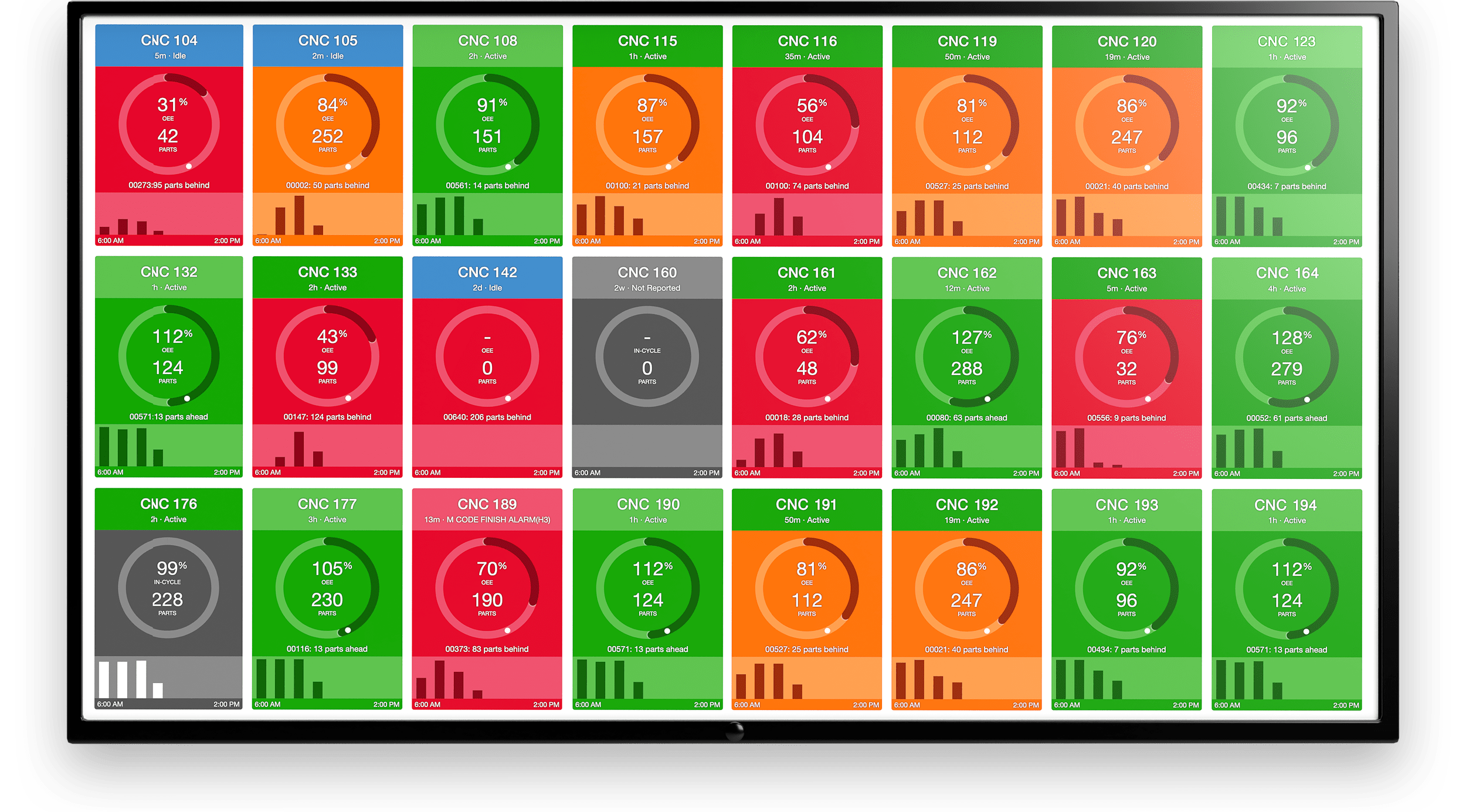

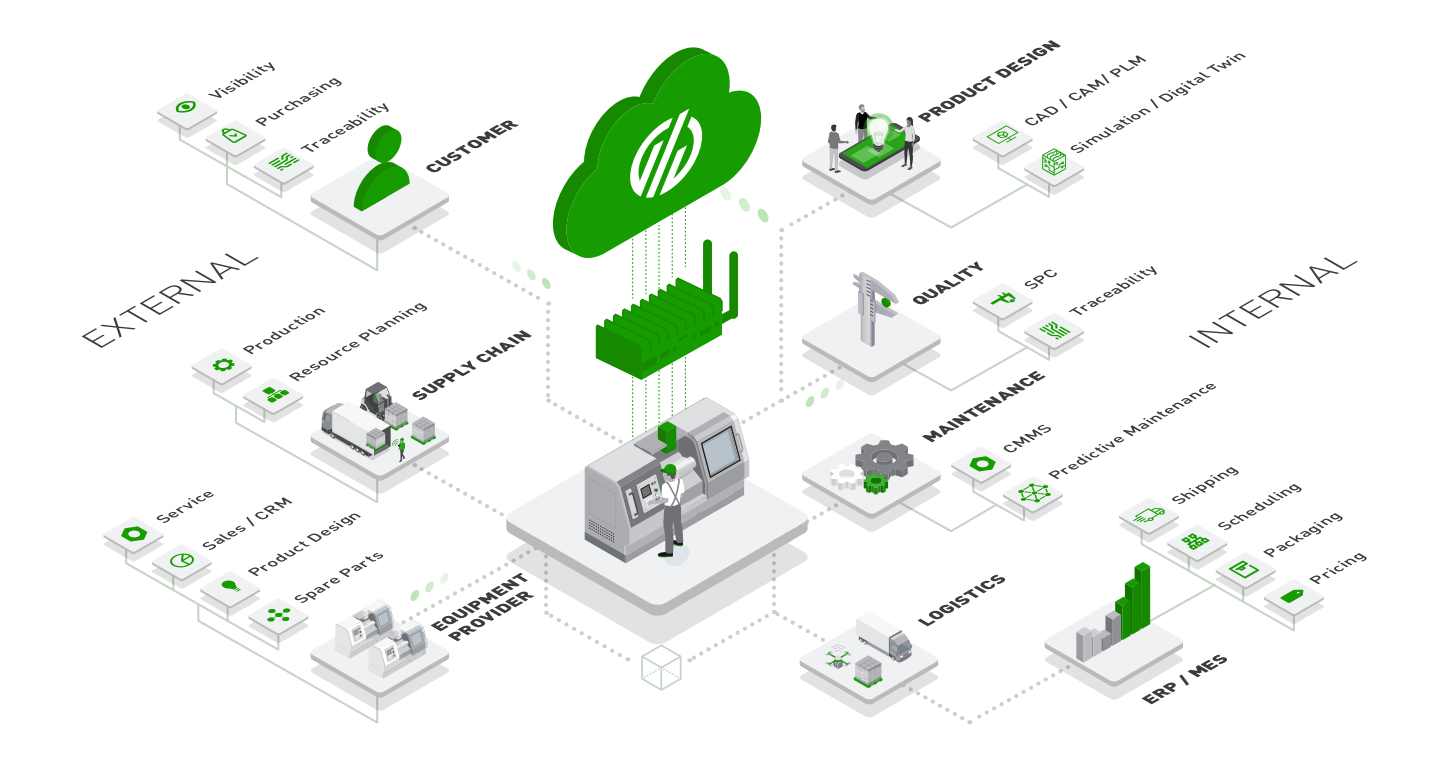

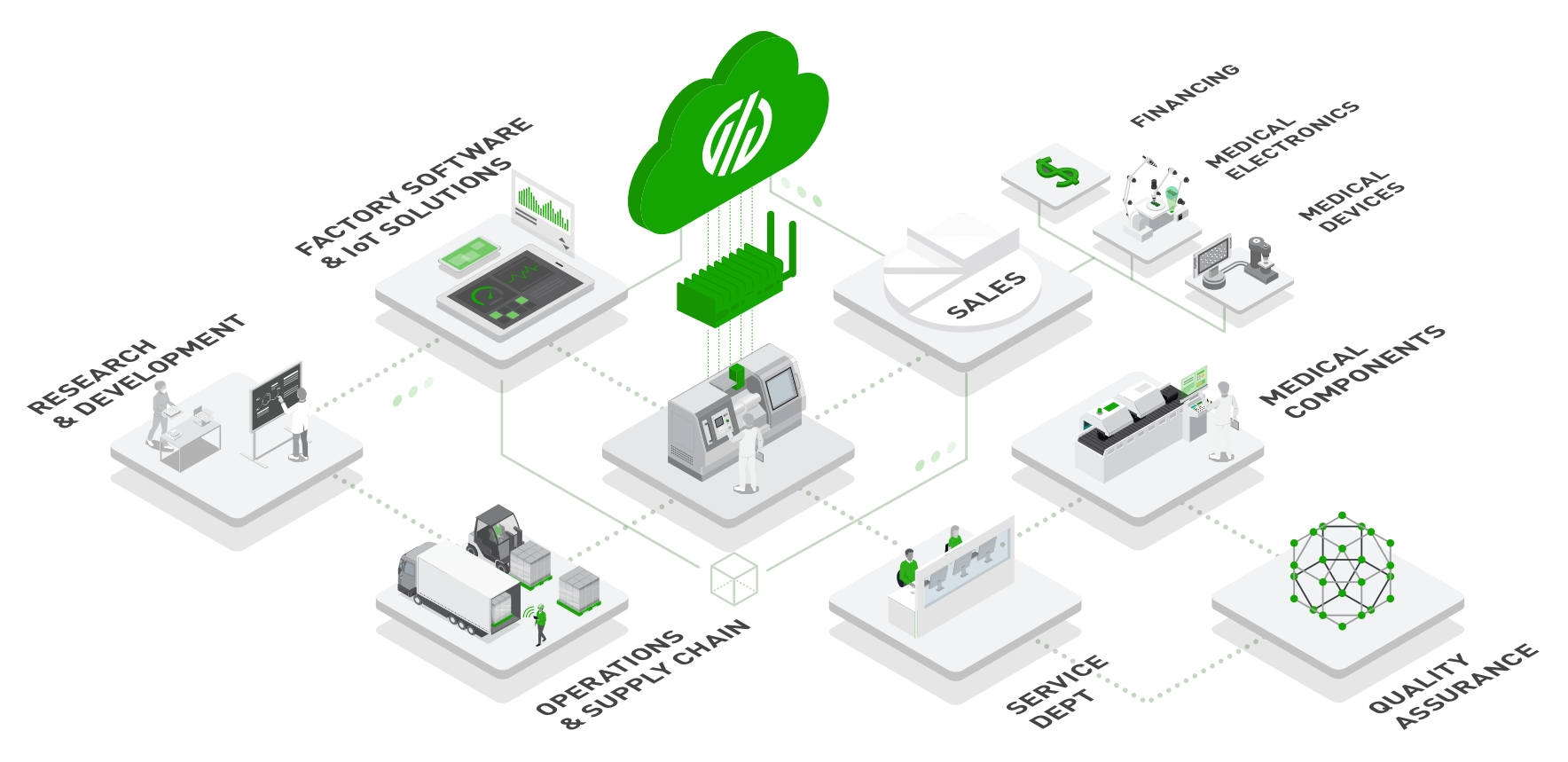

With MachineMetrics, companies are able to take advantage of our automated data transformation engine that standardizes and formats a wide range of data types for easy analysis. It can handle custom sensor values, machine status, modes, alarms, overrides, load, speeds, feeds, PMC parameters, diagnostics, and more.


Can You Trust Your Sources?

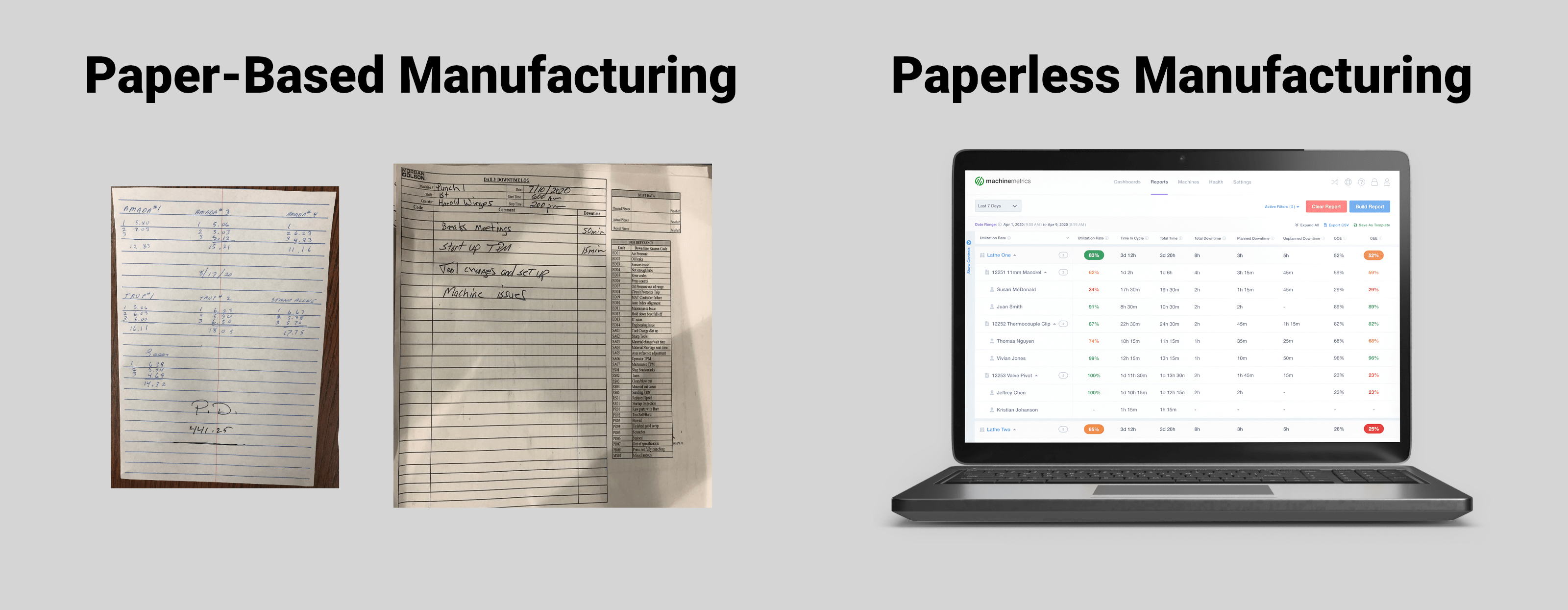

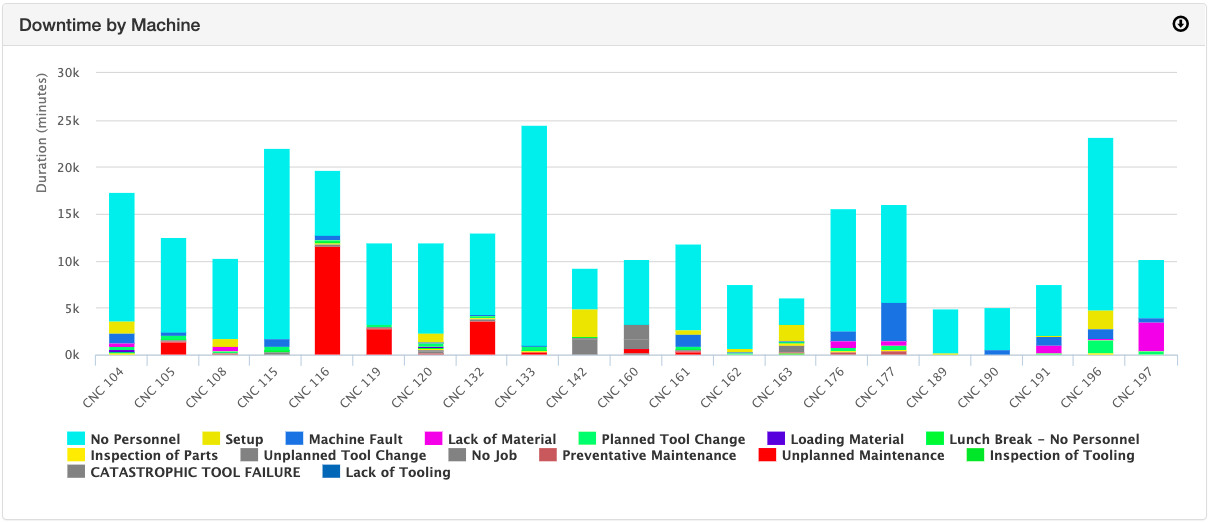

It’s also important to consider the veracity and accuracy of your data sources, especially if those sources are human. Humans aren’t the most accurate creatures. We round and forget and fudge the numbers. We get lazy or tired or hungry or distracted. As a result, human-sourced data will almost always be dirty in some way. For example, machine operators might enter data about the state of the equipment they use. When they log downtime, the listed reasons may be accurate while the durations are closer to estimates. A predictive system built on that data will be less accurate, because its outputs inherit those estimates instead of reflecting truly accurate measurements.
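When both an operator entry and a machine-derived duration exist for the same stop, a simple cross-check can flag estimates that drift too far. This is a minimal sketch assuming a machine-measured duration is available for each entry; the field names and the 2-minute tolerance are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DowntimeEntry:
    reason: str              # entered by the operator; usually reliable
    reported_minutes: float  # entered by the operator; often an estimate
    measured_minutes: float  # assumed to come from machine state signals


def flag_estimates(
    entries: List[DowntimeEntry], tolerance_minutes: float = 2.0
) -> List[DowntimeEntry]:
    """Return entries whose reported duration is off by more than the tolerance."""
    return [
        e for e in entries
        if abs(e.reported_minutes - e.measured_minutes) > tolerance_minutes
    ]


def reconcile(entries: List[DowntimeEntry]) -> List[Tuple[str, float]]:
    """Keep the human's reason but trust the machine's clock for duration."""
    return [(e.reason, e.measured_minutes) for e in entries]


log = [
    DowntimeEntry("tool change", reported_minutes=15, measured_minutes=13.5),
    DowntimeEntry("waiting on material", reported_minutes=30, measured_minutes=47.0),
]
print([e.reason for e in flag_estimates(log)])  # ['waiting on material']
print(reconcile(log))  # [('tool change', 13.5), ('waiting on material', 47.0)]
```

The design choice here is to split responsibilities: people supply the “why,” which machines can’t know, and machines supply the “how long,” which people tend to round.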

Deciding on realistic tolerances is essential. Does the use case need accuracy to the second, or are half-hour intervals good enough? For some machine data use cases, you could be looking at millisecond resolution or finer. Whatever tolerance is deemed reasonable for the project, enforce it, and make sure any data entering the system falls within those parameters.
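Enforcing a timing tolerance can be as simple as checking that consecutive samples are never further apart than the agreed interval. This is a minimal sketch; the 1-second tolerance and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Sequence, Tuple


def gaps_exceeding(
    timestamps: Sequence[datetime], max_gap: timedelta
) -> List[Tuple[datetime, datetime]]:
    """Return (previous, next) sample pairs spaced further apart than max_gap."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > max_gap]


t0 = datetime(2021, 6, 1, 8, 0, 0)
samples = [t0 + timedelta(seconds=s) for s in (0, 1, 2, 10, 11)]
for start, end in gaps_exceeding(samples, max_gap=timedelta(seconds=1)):
    print(f"gap of {(end - start).total_seconds()} s after {start.time()}")
# gap of 8.0 s after 08:00:02
```

Flagged gaps can then be rejected, backfilled from another source, or marked as unknown, depending on what the project can tolerate.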

The MachineMetrics High-Frequency Data Adapter captures machine data at 1 kHz, or 1,000 samples per second, compared with a standard 1 Hz, so you don’t miss brief events that a once-per-second sample would skip.
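To see why sampling rate matters, consider a toy simulation (not the adapter itself) of a 50 ms load spike sampled at 1 Hz and at 1 kHz. The signal shape and values are hypothetical, chosen only to illustrate the arithmetic.

```python
from typing import Callable, List


def sample(signal: Callable[[float], float], rate_hz: int, duration_s: float) -> List[float]:
    """Sample a signal (a function of time in seconds) at a fixed rate."""
    n = int(duration_s * rate_hz)
    return [signal(i / rate_hz) for i in range(n)]


def spindle_load(t: float) -> float:
    """Hypothetical load: 40% baseline with a 50 ms spike to 95% at t = 2.3 s."""
    return 95.0 if 2.3 <= t < 2.35 else 40.0


slow = sample(spindle_load, rate_hz=1, duration_s=5)     # 5 samples
fast = sample(spindle_load, rate_hz=1000, duration_s=5)  # 5,000 samples
print(max(slow))  # 40.0 (the spike falls between one-second samples)
print(max(fast))  # 95.0
```

At 1 Hz, any event shorter than a second can fall entirely between samples; at 1 kHz, the same 50 ms spike shows up in 50 consecutive samples.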

This is also where companies should look for potential bias. Lies of omission still lead to fairytale predictions. Are your data sources collecting broadly enough to show the whole picture, or are they prone to bias because of the collection methods used? For example, if you’re trying to determine the average salary of a production line worker but all of your respondents were men, your figure is likely to be skewed; surveying both men and women would give a clearer and more accurate picture. Actively seeking out oversights like this leads to cleaner data and more accurate predictions.
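A quick representation check compares who is in your sample against who is in the population you care about. This is a minimal sketch; the 50/50 workforce split and the 10-percentage-point tolerance are hypothetical assumptions, and in practice the expected shares would come from records such as HR data.

```python
from collections import Counter
from typing import Dict, Iterable


def representation_gaps(
    sample_groups: Iterable[str],
    expected_share: Dict[str, float],
    tolerance: float = 0.10,
) -> Dict[str, float]:
    """Return groups whose share of the sample differs from their expected
    share by more than `tolerance` (positive = over-represented)."""
    counts = Counter(sample_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in expected_share.items():
        observed = counts[group] / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical workforce split used as the reference population.
workforce = {"men": 0.5, "women": 0.5}
print(representation_gaps(["men"] * 20, workforce))                   # {'men': 0.5, 'women': -0.5}
print(representation_gaps(["men"] * 10 + ["women"] * 10, workforce))  # {}
```

An empty result doesn’t prove the data is unbiased, but a non-empty one is a clear signal to fix collection before trusting any averages.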

Context and Complexity

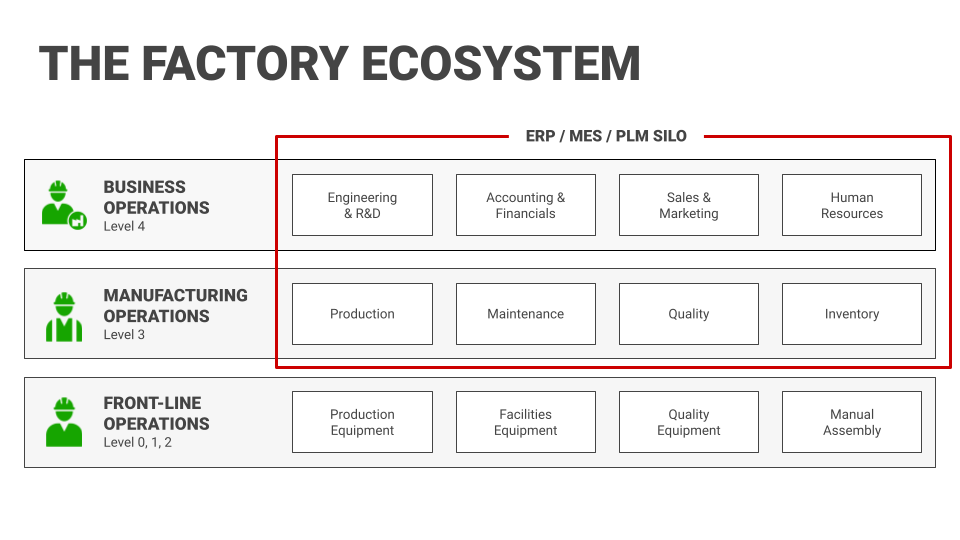

The more complex a system, the more room there is for inaccuracies, inconsistencies, risks, and general breakdowns in the data and logic that underpin it when you try to translate it into something that can be used for analysis. How many steps are there in the process you’re collecting data on? Do you have the industry knowledge to give context to the raw data? For example, a machine that “goes down” multiple times within minutes, with successful production in between, is probably not running true production. More likely, those short runs are tests to confirm that whatever caused the initial downtime has been fully resolved and that the machine is recalibrated and ready to resume real production. Leaving these numbers in the data set as entered could cause serious inaccuracies that are difficult for an untrained eye to catch.
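A first pass at catching the pattern described above is to group downtime events that follow each other closely, so someone with shop floor knowledge can review them. This is a minimal sketch; the 5-minute window and the event times are hypothetical, and clusters are flagged for review rather than deleted automatically.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Event = Tuple[datetime, datetime]  # (downtime start, downtime end)


def downtime_clusters(events: List[Event], window: timedelta) -> List[List[Event]]:
    """Group downtime events that start within `window` of the previous event's
    end. Clusters of two or more are returned for human review, since they may
    be post-repair test cycles rather than genuine production stops."""
    clusters: List[List[Event]] = []
    for start, end in sorted(events):
        if clusters and start - clusters[-1][-1][1] <= window:
            clusters[-1].append((start, end))
        else:
            clusters.append([(start, end)])
    return [c for c in clusters if len(c) > 1]


t0 = datetime(2021, 6, 1, 9, 0)


def at(minutes: int) -> datetime:
    """Helper: a time `minutes` after the start of this hypothetical shift."""
    return t0 + timedelta(minutes=minutes)


events = [(at(0), at(20)), (at(22), at(23)), (at(25), at(26)), (at(180), at(190))]
for cluster in downtime_clusters(events, window=timedelta(minutes=5)):
    print(f"{len(cluster)} stops between {cluster[0][0]:%H:%M} and {cluster[-1][1]:%H:%M}")
# 3 stops between 09:00 and 09:26
```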

This doesn’t mean that your data science team has to be the ultimate expert in everything in your industry. It does mean, however, that having channels where the experts can add context is critical. For example, with MachineMetrics, machine operators are equipped with a tablet device on the shop floor, right on their machinery. They can quickly and easily add human context to the numerical data in order to boost accuracy at the analysis stage. Without this sort of context channel, it can become a guessing game to determine which numbers should be thrown in the pot and which should be discarded.
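Once operators can add context, that context has to be joined back to the machine data. One simple approach is to attach each note to the downtime it was entered during, or shortly after. This is a minimal sketch; the data classes, the 5-minute grace period, and the example notes are hypothetical and not how any particular product stores annotations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Note:
    at: datetime
    text: str


@dataclass
class Downtime:
    start: datetime
    end: datetime
    note: Optional[str] = None


def attach_notes(downtimes: List[Downtime], notes: List[Note], grace: timedelta) -> List[Downtime]:
    """Attach the earliest operator note entered during a downtime, or within
    `grace` after it ended, to that downtime. O(n*m), fine for a sketch."""
    ordered = sorted(notes, key=lambda n: n.at)
    for d in downtimes:
        for n in ordered:
            if d.start <= n.at <= d.end + grace:
                d.note = n.text
                break
    return downtimes


day = datetime(2021, 6, 1)
stops = [
    Downtime(day.replace(hour=10), day.replace(hour=10, minute=15)),
    Downtime(day.replace(hour=13), day.replace(hour=13, minute=5)),
]
notes = [
    Note(day.replace(hour=10, minute=17), "test run after spindle repair"),
    Note(day.replace(hour=12), "shift meeting"),
]
for d in attach_notes(stops, notes, grace=timedelta(minutes=5)):
    print(f"{d.start:%H:%M} {d.note}")
# 10:00 test run after spindle repair
# 13:00 None
```

Downtimes left without a note are exactly the ones where analysts would otherwise be guessing, so they are worth surfacing back to the operator.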

Lossless Transformations

When you transform one thing into another, there’s almost always some degree of loss, however minute, whether that’s transforming ore into steel, cotton into textiles, or raw data into a format for analysis. It’s important to determine how much loss is acceptable and how much is preventable. Starting with clean, accurate data is a vital first step toward a clean and accurate analysis. Beyond that stage, consider which tools you’ll use and for what applications, how likely they are to lose fidelity, and to what degree that loss is acceptable (or irrelevant). This leads directly back to understanding what you’re trying to do with your data and your models, what problems you’re trying to solve, and the level of accuracy required to solve them effectively.
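Measuring the loss a transformation introduces, and comparing it with what the use case can tolerate, is one practical way to make that judgment. This sketch uses rounding to a fixed step as a stand-in for a lossy transformation (for example, storing fewer decimal places); the feed rate readings and tolerances are hypothetical.

```python
from typing import List


def quantize(values: List[float], step: float) -> List[float]:
    """Round each value to the nearest multiple of `step`, as a lossy
    transformation such as storing fewer decimal places might."""
    return [round(v / step) * step for v in values]


def max_loss(original: List[float], transformed: List[float]) -> float:
    """Largest absolute difference introduced by the transformation."""
    return max(abs(a - b) for a, b in zip(original, transformed))


def acceptable(values: List[float], step: float, tolerance: float) -> bool:
    """True if quantizing at `step` keeps every value within `tolerance`."""
    return max_loss(values, quantize(values, step)) <= tolerance


feed_rates = [1203.4, 1198.7, 1201.26]  # hypothetical readings, mm/min
print(acceptable(feed_rates, step=1.0, tolerance=0.5))  # True
print(acceptable(feed_rates, step=1.0, tolerance=0.1))  # False
```

Because rounding to the nearest multiple of `step` never moves a value by more than `step / 2` (ignoring floating-point noise), the tolerance can often be used to choose the step directly.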

At MachineMetrics, we make sure your data is giving you the whole truth and nothing but. We are industry experts who understand the depth, breadth, and type of data you need to solve your most pressing problems, whether that’s machine downtime, optimizing output, or a myriad of other challenges, using a data-led approach. We use simple, digestible formats for sharing and analysis that retain their integrity and fidelity, even in real time. MachineMetrics simplifies the task of connecting shop floor data to real-world decision-making through a tried-and-true process, explained in plain language and with tools simple enough that even non-technical folks can install them themselves, for a service that has led our customers to ROI in less than a week. To see what types of questions MachineMetrics can answer for your business or to book a demo, reach out to us anytime here.
